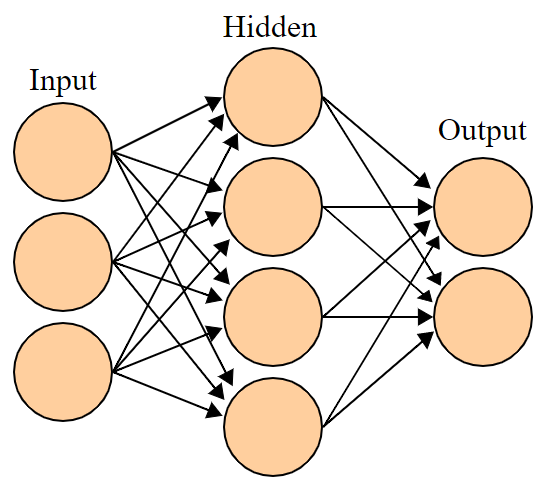

Building your own neural network in Python is super easy and fun. Let me walk you through the steps.

Yes, there are thousands of posts and websites that explain the theory of neural networks. Among them, I found one by Michael Nielsen in which he clearly explains the theory behind neural nets and encourages readers to build one. Thanks to Michael Nielsen for the great tutorial.

Implementation of a neural network from scratch

First, import all the necessary libraries.

    import numpy as np
    from statistics import mean
    import pandas as pd
    import numpy.random as r
    from random import randrange
    from tqdm import tqdm_notebook as tqdm
    from matplotlib import pyplot as plt

We need three important functions for an ANN to work: the sigmoid activation, its derivative, and a cost function.

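    def sigmoid(x):
        # Logistic activation: squashes any real input into (0, 1)
        return 1 / (1 + np.exp(-x))

    def sigmoid_prime(x):
        # Derivative of the sigmoid, used during backpropagation
        return sigmoid(x) * (1 - sigmoid(x))

    def cost(y_hat, y):
        # Mean squared error between prediction and target
        return np.mean((y_hat - y) ** 2)

Let's initialize an empty network with the desired number of layers, input size, and output size.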

    def init_net(inputSize, layers, outputSize):
        input_size = inputSize
        output_size = outputSize
        params, metadata, theta = {}, {}, {}
        metadata["inputSize"] = input_size
        metadata["outputSize"] = output_size
        metadata["n_layers"] = layers
        params["metadata"] = metadata
        for idx in range(0, layers):
            if idx != layers - 1:
                # Hidden layers keep the same width as the input
                theta["W_" + str(idx)] = r.rand(input_size, input_size)
                theta["B_" + str(idx)] = np.array([r.rand(input_size)]).T
            else:
                # The final layer maps to the output size
                theta["W_" + str(idx)] = r.rand(outputSize, input_size)
                theta["B_" + str(idx)] = np.array([r.rand(outputSize)]).T
        params["theta"] = theta
        return params

The function forwardPass() takes an input vector, applies a linear transformation, and then passes the result through the sigmoid activation function.

Let's define the XOR data as the input (X) to the neural network, along with the desired output labels (y).

    X = np.array([
        [0, 0],
        [0, 1],
        [1, 0],
        [1, 1],
    ])
    y = np.atleast_2d([0, 1, 1, 0]).T

Now pass the input data X into the forwardPass() function and store the result, together with all the weight parameters, in a variable called "params".

    params = init_net(2, 2, 1)  # forwardPass() needs an initialized network
    params = forwardPass(X[1], y[1], params)
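The definition of forwardPass() is not shown in this post, so here is a minimal sketch of what it might look like. It is a reconstruction, not the original code: the only things taken from the post are the dictionary keys that backPropogation() reads ("A_prev" + layer index, "Z_" + layer index, and "error"). The "y_hat" key and the sign of the error (desired minus predicted) are assumptions.

```python
import numpy as np


def sigmoid(x):
    # Same logistic activation as defined earlier in the post
    return 1 / (1 + np.exp(-x))


def forwardPass(x, y, params):
    """Hypothetical reconstruction: run one sample through every layer."""
    theta = params["theta"]
    n_layers = params["metadata"]["n_layers"]
    cache = {}
    # Treat the sample as a column vector so W @ a has shape (units, 1)
    a = np.asarray(x, dtype=float).reshape(-1, 1)
    for l in range(n_layers):
        cache["A_prev" + str(l)] = a  # input to layer l
        z = np.matmul(theta["W_" + str(l)], a) + theta["B_" + str(l)]
        cache["Z_" + str(l)] = z      # pre-activation of layer l
        a = sigmoid(z)                # activation of layer l
    cache["y_hat"] = a  # assumed key, not read by backPropogation()
    # Assumed sign: desired minus predicted, so that -error is the cost gradient
    cache["error"] = np.asarray(y, dtype=float).reshape(-1, 1) - a
    params["forwardPass"] = cache
    return params
```

With this sketch, `params["forwardPass"]["y_hat"]` holds the network's prediction for the sample and `params["forwardPass"]["error"]` holds how far it is from the label.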

After the first forward pass, we get the first prediction at the output of our neural network. Since the weights are randomly initialized, this prediction is likely to be far from the ground truth. The difference between the prediction and the actual value (called the error) is calculated and used to adjust the weights that we randomly assigned in the beginning.

Error = Desired Output - Predicted Output

This sign convention is the one implied by backPropogation() below, which negates the stored error before subtracting the gradient from the weights.

Backpropagation is the essence of neural network training. It is the method of fine-tuning the weights of a neural network based on the error rate obtained in the previous epoch (i.e., iteration). Proper tuning of the weights allows you to reduce error rates and make the model reliable by increasing its generalization.

Backpropagation is short for "backward propagation of errors." It is a standard method of training artificial neural networks. This method helps calculate the gradient of a loss function with respect to all the weights in the network.

The backpropagation algorithm computes the gradient of the loss function with respect to a single weight by the chain rule. It efficiently computes one layer at a time, unlike a naive direct computation. It computes the gradient, but it does not define how the gradient is used. It generalizes the computation in the delta rule. (source).
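For the squared-error cost used in this post, the chain rule works out to the layer-by-layer equations sketched here. The notation is assumed for illustration and does not come from the original post: $a^{l-1}$ is the input to layer $l$, $z^{l} = W^{l} a^{l-1} + b^{l}$ is its pre-activation, $a^{l} = \sigma(z^{l})$ is its output, $\hat{y} = a^{L}$ is the output of the final layer $L$, $y$ is the desired output, and $\eta$ is the learning rate.

$$
\begin{aligned}
C &= \tfrac{1}{2}\,\lVert y - \hat{y} \rVert^{2} \\
\delta^{L} &= \frac{\partial C}{\partial z^{L}} = -(y - \hat{y}) \odot \sigma'(z^{L}) \\
\delta^{l} &= \big( (W^{l+1})^{\top} \delta^{l+1} \big) \odot \sigma'(z^{l}) \\
\frac{\partial C}{\partial W^{l}} &= \delta^{l}\,(a^{l-1})^{\top}, \qquad \frac{\partial C}{\partial b^{l}} = \delta^{l} \\
W^{l} &\leftarrow W^{l} - \eta\,\frac{\partial C}{\partial W^{l}}, \qquad b^{l} \leftarrow b^{l} - \eta\,\frac{\partial C}{\partial b^{l}}
\end{aligned}
$$

In the code that follows, `delta` plays the role of $\delta^{l}$, `dw` is $\partial C / \partial W^{l}$, and `a_prev` is $(a^{l-1})^{\top}$.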

    def backPropogation(params, lr, update=True, debug=False):
        L = params["metadata"]["n_layers"]
        params["metadata"]["learningRate"] = lr
        finalLayer = True
        deltas = {}
        d_theta = {}
        # Walk the layers from the output back to the input
        for l in reversed(range(0, L)):
            if finalLayer:
                # Input to this layer as a row vector
                a_prev = params["forwardPass"]["A_prev" + str(l)].reshape(1, -1)
                # Output-layer delta: negated error times the sigmoid derivative
                delta = -params["forwardPass"]["error"] * sigmoid_prime(params["forwardPass"]["Z_" + str(l)])
                deltas["delta_" + str(l)] = delta
                # Gradient of the cost with respect to this layer's weights
                dw = np.matmul(delta, a_prev)
                d_theta["d_theta_" + str(l)] = dw
                if update:
                    params["theta"]["W_" + str(l)] -= params["metadata"]["learningRate"] * dw
                    params["theta"]["B_" + str(l)] -= params["metadata"]["learningRate"] * delta
                if debug:
                    print(delta, dw)
                finalLayer = False
            else:
                a_prev = params["forwardPass"]["A_prev" + str(l)].reshape(1, -1)
                # Note: when update=True, W_(l+1) has already been updated at this point
                w_next = params["theta"]["W_" + str(l + 1)].T
                # Hidden-layer delta: propagate the next layer's delta backwards
                delta = np.matmul(w_next, delta) * sigmoid_prime(params["forwardPass"]["Z_" + str(l)])
                deltas["delta_" + str(l)] = delta
                dw = np.matmul(delta, a_prev)
                d_theta["d_theta_" + str(l)] = dw
                if update:
                    params["theta"]["W_" + str(l)] -= params["metadata"]["learningRate"] * dw
                    params["theta"]["B_" + str(l)] -= params["metadata"]["learningRate"] * delta
                if debug:
                    print(delta)
        params["deltas"] = deltas
        params["d_theta"] = d_theta
        return params
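One way to gain confidence in gradient code like this is a numerical gradient check. The sketch below is not part of the original post. It applies the same delta rule to a single sigmoid neuron and compares the analytic gradient with central finite differences. The helper names `neuron_cost`, `analytic_grad`, and `numeric_grad` are made up for this illustration.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


def sigmoid_prime(x):
    return sigmoid(x) * (1 - sigmoid(x))


def neuron_cost(w, b, x, y):
    # 0.5 * squared error of a single sigmoid neuron
    y_hat = sigmoid(np.matmul(w, x) + b)
    return 0.5 * float(np.sum((y - y_hat) ** 2))


def analytic_grad(w, b, x, y):
    # Delta rule: delta = -(y - y_hat) * sigmoid'(z), dC/dW = delta @ x.T
    z = np.matmul(w, x) + b
    delta = -(y - sigmoid(z)) * sigmoid_prime(z)
    return np.matmul(delta, x.T)


def numeric_grad(w, b, x, y, eps=1e-6):
    # Central finite differences, perturbing one weight at a time
    grad = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        w_plus, w_minus = w.copy(), w.copy()
        w_plus[idx] += eps
        w_minus[idx] -= eps
        grad[idx] = (neuron_cost(w_plus, b, x, y) - neuron_cost(w_minus, b, x, y)) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
w = rng.random((1, 2))        # one output unit, two inputs
b = rng.random((1, 1))
x = np.array([[0.0], [1.0]])  # the XOR sample [0, 1] as a column vector
y = np.array([[1.0]])

max_diff = np.max(np.abs(analytic_grad(w, b, x, y) - numeric_grad(w, b, x, y)))
print("largest difference:", max_diff)
```

If the two gradients agree to within a small tolerance, the analytic formula is very likely implemented correctly. The same idea extends to the full network by perturbing each entry of every weight matrix.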

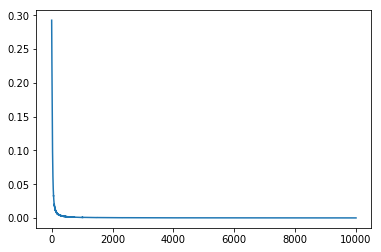

Now we put it all together and run forwardPass() and backPropogation() for several cycles (also called epochs) and watch how the error decreases, in other words, how our neural network learns the data to accurately predict the outcome for an input.

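    var = init_net(2, 2, 1)  # Initialize a network with the desired number of layers
    epochs = 10000
    epoch_error = []
    for i in range(0, epochs):
        # randrange excludes its upper bound, so use len(X) to cover all four samples
        item = randrange(0, len(X))
        var = forwardPass(X[item], y[item], var)
        var = backPropogation(var, 0.125, debug=False)
        # Squared-error cost for this sample, stored as a plain float
        c = 0.5 * float(np.sum(var["forwardPass"]["error"] ** 2))
        epoch_error.append(c)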

Plot the error from each epoch.
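The plotting code is not shown in the post, so the following is only a sketch of one way to do it. Because each iteration trains on a single random sample, the raw error curve is noisy, so this version also overlays a moving average. The helper names `moving_average` and `plot_errors` and the default window of 100 are assumptions made for illustration.

```python
import numpy as np


def moving_average(values, window=100):
    # Average over a sliding window so the trend is visible through the noise
    values = np.asarray(values, dtype=float).ravel()
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    kernel = np.ones(window) / window
    return np.convolve(values, kernel, mode="valid")


def plot_errors(errors, window=100):
    # matplotlib is imported here so the smoothing helper works without it
    from matplotlib import pyplot as plt

    raw = np.asarray(errors, dtype=float).ravel()
    smoothed = moving_average(raw, window)
    plt.plot(raw, alpha=0.3, label="per-sample error")
    # Align each average with the last iteration inside its window
    plt.plot(np.arange(window - 1, window - 1 + len(smoothed)), smoothed,
             label="moving average (window=%d)" % window)
    plt.xlabel("iteration")
    plt.ylabel("error")
    plt.legend()
    plt.show()
```

After the training loop has finished, calling `plot_errors(epoch_error)` should draw both curves.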
